Private Data Processing

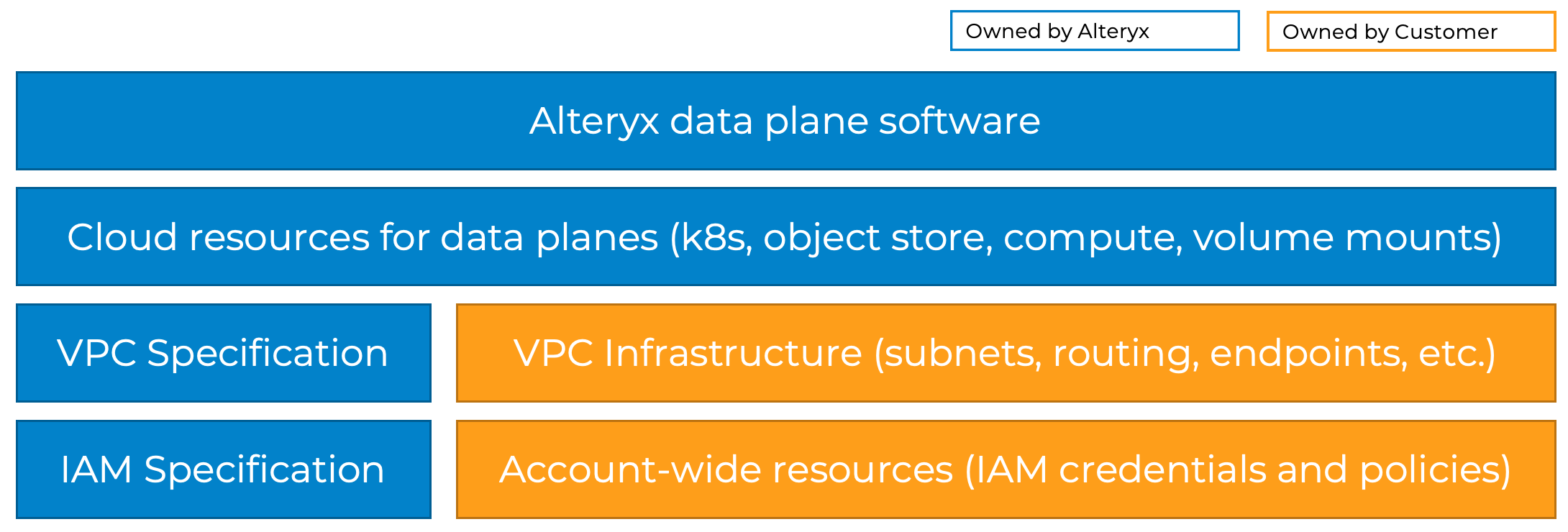

Private data processing involves running an Alteryx One Platform data processing cluster inside your own virtual private cloud (VPC) in AWS, Azure, or Google Cloud Platform. This combination of your infrastructure, together with Alteryx-managed cloud resources and software, is commonly referred to as a private compute plane or a private data processing environment.

Set Up Private Data Processing for Your Cloud Provider

Use these guides to set up private data processing for your cloud service...

Cloud Resources

Alteryx One uses automated provisioning pipelines with infrastructure as code (IaC) to create and maintain these resources for you, and manages them with Terraform Cloud. Terraform is an IaC tool that lets you define and manage infrastructure resources through human-readable configuration files. Terraform Cloud is a SaaS product provided by HashiCorp. Private data processing resources are created and managed with a set of Terraform files, Terraform Cloud APIs, and private Terraform Cloud agents running on Alteryx infrastructure.

The full list of cloud resources varies depending on which apps you enable within your private data processing environment. The resources might include…

Object Storage: Base storage layer for files (for example, uploaded datasets, job outputs, data samples, caching, and other temp engine files).

IAM Roles and Policies: Necessary permissions to provision cloud resources and deploy software.

Kubernetes: Runs the VM instances for some Alteryx One services and jobs in the data plane.

Compute (Virtual Machines): Compute resources required to run jobs and services.

Secret Manager: Storage of infrastructure secrets.

Redis: Service-to-service messaging within the VPC.

Shared File System: Network attached storage.

Spark Processing: (If enabled) Processing of large data jobs.

The specific services used vary by public cloud provider as follows:

| Service | AWS | Azure | GCP |
|---|---|---|---|
| Object Storage | S3 | Blob Storage | Google Storage |
| IAM Roles and Policies | IAM Roles, IAM Policies | IAM Roles, IAM Policies | IAM Roles |
| Kubernetes | EKS | AKS | GKE |
| Compute (Virtual Machines) | EC2 | Virtual Machines | Compute Instance |
| Secrets Management | Secret Manager | Key Vault | Secret Manager |
| Redis | Amazon MemoryDB | Azure Cache | Google MemoryStore |
| Shared File System | EFS | Azure Files | Google Filestore |
| Spark Processing | Serverless EMR | N/A | N/A |
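The table above can be read as a lookup from a generic service to its provider-specific name. Here is a minimal Python sketch of that lookup for a few of the rows, with values copied from the table. The dictionary and the function name `provider_service` are illustrative only and are not part of any Alteryx API.

```python
# Provider-specific service names, copied from a subset of the table rows.
# None marks a service the table lists as N/A for that provider.
SERVICES = {
    "Object Storage": {"AWS": "S3", "Azure": "Blob Storage", "GCP": "Google Storage"},
    "Kubernetes": {"AWS": "EKS", "Azure": "AKS", "GCP": "GKE"},
    "Redis": {"AWS": "Amazon MemoryDB", "Azure": "Azure Cache", "GCP": "Google MemoryStore"},
    "Shared File System": {"AWS": "EFS", "Azure": "Azure Files", "GCP": "Google Filestore"},
    "Spark Processing": {"AWS": "Serverless EMR", "Azure": None, "GCP": None},
}


def provider_service(service, provider):
    """Return the provider-specific name for a generic service, or None if N/A."""
    return SERVICES[service][provider]


print(provider_service("Kubernetes", "Azure"))
print(provider_service("Spark Processing", "GCP"))
```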

Cloud Apps

Alteryx One runs a number of jobs and services inside the private data processing environment. The exact combination of infrastructure and software depends on which Alteryx One applications you deploy there. Because each application is packaged separately, you can deploy only the cloud resources and software that you need for the applications that you want to run.

Each application has a defined package that consists of…

Required permissions.

Required network setup that includes subnets and IP ranges.

Alteryx-managed cloud resources.

Alteryx-managed software.

For example, if you want to only deploy Designer Cloud, there are specific permissions and subnets (with IP ranges) that you are required to set up ahead of time. After you perform the setup, you can sign in to Alteryx One and begin the deployment process.

If you want to only deploy Cloud Execution for Desktop, there is a different set of required permissions and subnets and a different box to check in Alteryx One when you perform the deploy.

If you want to deploy both packages into the same private compute plane, you must complete both sets of setup steps, then complete the deploy step for both.
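One way to picture the relationship between packages and setup steps is as a union of per-package prerequisites. The Python sketch below assumes that model. All permission and subnet names are placeholders invented for illustration; the real values come from the setup guide for your cloud provider.

```python
# Hypothetical per-package prerequisites. The names are placeholders,
# not real Alteryx permission or subnet identifiers.
PACKAGE_PREREQS = {
    "designer-cloud": {
        "permissions": {"perm-designer-cloud"},
        "subnets": {"subnet-designer-cloud"},
    },
    "cloud-execution-for-desktop": {
        "permissions": {"perm-cefd"},
        "subnets": {"subnet-cefd"},
    },
}


def required_setup(packages):
    """Return the combined prerequisites for every package to be deployed."""
    combined = {"permissions": set(), "subnets": set()}
    for name in packages:
        prereqs = PACKAGE_PREREQS[name]
        combined["permissions"] |= prereqs["permissions"]
        combined["subnets"] |= prereqs["subnets"]
    return combined


both = required_setup(["designer-cloud", "cloud-execution-for-desktop"])
print(sorted(both["permissions"]))
```

Deploying a single package yields only that package's prerequisites, while deploying both yields the two sets combined.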

Designer Cloud Package

When you deploy the Designer Cloud package, Alteryx One provisions these cloud resources.

Required Services

You can find the exact service names for each cloud provider in the Cloud Resources section.

Object Storage

Kubernetes

Compute

Secret Manager

Redis

Shared File System

(Optional) Spark Processing

Node Groups and Types

Within the Kubernetes cluster, Alteryx provisions these compute resources for each cloud provider. These node types and priorities might change over time as the cloud provider evolves. For now, Alteryx strikes a balance between a few factors…

AMD machine types are less expensive than Intel machine types.

Some job types run best with memory-optimized or compute-optimized nodes. However, for some cloud providers, these node types are much more expensive, while the general purpose types are more affordable.

AWS allows Alteryx to specify a priority order of node types and provisions them as needed in priority order. Alteryx recommends this order: memory-optimized AMD machine types, then fall back to Intel machine types, then general purpose machine types.

| Node Group Type | AWS | Azure | GCP |
|---|---|---|---|
| | t3a.2xlarge, t3.2xlarge | Standard_D2s_v3 | n2d-standard-2 |
| | r6a.2xlarge, r6i.2xlarge, m6a.4xlarge, m6i.4xlarge | Standard_B16as_v2 | n2d-standard-16 |
| | Same as | Same as | Same as |
| | Same as | Same as | Same as |
| | Same as | Same as | Same as |

The convert, data-system, file-system, and photon node groups have a minimum scale set of 1 and a maximum of 30.
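The priority-order behavior described for AWS can be illustrated with a short Python sketch. The list is the AWS cell from the second table row, read left to right, which is assumed here to be the priority order. The `available` set and the function name are hypothetical; the actual selection is performed by the cloud provider, not by code like this.

```python
# AWS node types from the second table row, assumed to be in priority order:
# memory-optimized AMD, memory-optimized Intel, then general purpose.
AWS_PRIORITY = ["r6a.2xlarge", "r6i.2xlarge", "m6a.4xlarge", "m6i.4xlarge"]


def pick_node_type(priority, available):
    """Return the first node type in priority order that is available, else None."""
    for node_type in priority:
        if node_type in available:
            return node_type
    return None


# If the top choice has no capacity, the next entry in the list is used.
print(pick_node_type(AWS_PRIORITY, {"r6i.2xlarge", "m6a.4xlarge"}))
```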

Software

Within the Kubernetes cluster, the Designer Cloud package uses both on-demand jobs and long-running services.

Kubernetes On-demand Jobs

For Kubernetes on-demand jobs, Alteryx One retrieves a container image (from cache or from a central store) and deploys it within an ephemeral pod that lasts for the duration of the job. All executables are in Java or Python.

conversion-jobs: Convert datasets from 1 format to another as needed within a workflow.

connectivity-jobs: Connect to external data systems at runtime.

photon-jobs: Photon is an in-memory prep and blend runtime engine for smaller dataset sizes.

amp-jobs: AMP is an Alteryx in-memory prep and blend runtime engine utilized primarily in Designer Experience.

publish-jobs: Write processed data to the output destination specified within the workflow.
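The on-demand job lifecycle (fetch the image from cache or a central store, run it in a pod that exists only for the duration of the job) can be sketched in Python as follows. This is a conceptual model only: the cache dictionary, `pull_from_central_store`, and the pod dictionary are stand-ins invented for illustration and do not reflect how Alteryx One or Kubernetes implement these steps.

```python
from contextlib import contextmanager

image_cache = {}  # hypothetical local cache: image name -> image reference


def pull_from_central_store(name):
    """Stand-in for pulling an image from a central store."""
    return f"image:{name}"


def get_image(name):
    """Return the image from cache, pulling from the central store on a miss."""
    if name not in image_cache:
        image_cache[name] = pull_from_central_store(name)
    return image_cache[name]


@contextmanager
def ephemeral_pod(image):
    """Model a pod that exists only while the job runs."""
    pod = {"image": image, "running": True}
    try:
        yield pod
    finally:
        pod["running"] = False  # the pod is torn down when the job ends


def run_job(name, job):
    """Run a job callable inside an ephemeral pod built from the named image."""
    with ephemeral_pod(get_image(name)) as pod:
        return job(pod)


print(run_job("conversion-jobs", lambda pod: pod["image"]))
```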

Kubernetes Long-running Services

Alteryx uses Argo CD to deploy and maintain long-running services in your Kubernetes cluster. Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

Most long-running services in the cluster serve a utility function to allow Alteryx to monitor cluster health, scale the cluster up and down, and import/export secrets to and from the cloud-native key store and the Kubernetes secret store. These services are common to all packages that utilize Kubernetes and only 1 instance of these services will ever run at a time, even when you specify multiple packages that require them.

teleport-agent: Sets up a secure way for Alteryx SRE to connect to the cluster for troubleshooting. Alteryx One pulls the helm chart from the https://charts.releases.teleport.dev repository. Alteryx doesn't scan this third-party image.

datadog-agent: Collects logs and metrics from the cluster. Alteryx One pulls the helm chart from the https://helm.datadoghq.com repository. Alteryx doesn't scan this third-party image.

keda: Auto-scaling of long-running services based on custom metrics, with Kafka support. Alteryx doesn't scan this third-party image.

external-secrets: Import/export of secrets between AWS Secret Manager or Key Vault and the Kubernetes Secrets Store. Alteryx doesn't scan this third-party image.

cluster-autoscaler: Scale EKS, AKS, or GKE nodes based on pod demand. Alteryx doesn't scan this third-party image.

metrics-server: Allow EKS, AKS, or GKE to use the metrics API. Alteryx doesn't scan this third-party image.

kubernetes-reflector: Replication of the dockerConfigJson secret across all namespaces. Alteryx doesn't scan this third-party image.

The Designer Cloud package also deploys long-running services that service specific needs.

data-service: Connects to external data systems at design-time via the JDBC API. Alteryx developed this service. Snyk scans the image for vulnerabilities.
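The rule that only 1 instance of each common service runs, even when several packages require it, behaves like a set union over the services each package needs. Here is a Python sketch using service names from this page. The per-package grouping is simplified for illustration and is not an exhaustive inventory.

```python
# Utility services common to all packages that use Kubernetes.
COMMON = {
    "teleport-agent", "datadog-agent", "keda", "external-secrets",
    "cluster-autoscaler", "metrics-server", "kubernetes-reflector",
}

# Simplified illustration: common services plus package-specific ones.
PACKAGE_SERVICES = {
    "designer-cloud": COMMON | {"data-service"},
    "machine-learning": COMMON,
}


def services_to_run(packages):
    """Return the deduplicated set of long-running services for the packages."""
    result = set()
    for package in packages:
        result |= PACKAGE_SERVICES[package]
    return result


# 7 common services + data-service, not 7 + 7 + 1.
print(len(services_to_run(["designer-cloud", "machine-learning"])))
```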

Note

Alteryx occasionally needs to update the required permissions or network configuration as cloud applications evolve. Since you own these components, Alteryx will notify you of the required changes. You’ll have 60 days to make the updates, after which Alteryx will push out the new application versions. If you have not completed the required action by then, you may experience degradation or disruption of the data processing in your private environment.
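To track the update window, you can compute the deadline from the notification date. This sketch assumes the 60 days are calendar days counted from the date of the notice; confirm the exact start date in the notification you receive.

```python
from datetime import date, timedelta

NOTICE_PERIOD = timedelta(days=60)  # assumption: calendar days from the notice date


def update_deadline(notified_on):
    """Return the date by which the required changes should be complete."""
    return notified_on + NOTICE_PERIOD


print(update_deadline(date(2025, 1, 1)))
```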

Cloud Execution for Desktop Package

When you deploy the Cloud Execution for Desktop package, Alteryx One provisions these cloud resources.

Required Services

The Cloud Execution for Desktop package doesn't utilize Kubernetes. Instead, the package deploys a machine image that contains all the necessary software to execute Designer Desktop workflows. As such, the package only uses the compute service from each cloud provider. The exact service names for each cloud provider are in the Cloud Resources section.

Compute

Autoscale Groups and Node Types

Cloud Execution for Desktop deploys 2 or more virtual machines in an autoscale group.

These node types and priorities might change over time as the cloud provider evolves. For now, Alteryx strikes a balance between a few factors…

AMD machine types are less expensive than Intel machine types.

AWS allows Alteryx to specify a priority order of node types and provisions them as needed in priority order. Alteryx recommends this order: memory-optimized AMD machine types, then fall back to Intel machine types, then general purpose machine types.

| | AWS | Azure | GCP |
|---|---|---|---|
| Node Type | m5a.4xlarge | Standard_B16as_v2 | n2d-standard-16 |

Software

On a virtual machine, the Cloud Execution for Desktop package runs a few utility services for monitoring as well as the engine workers that process Designer Desktop jobs.

cefd-worker: These workers run the Alteryx in-memory engine to initiate connections to data sources, process data, and publish job outputs. Jobs are containerized and run inside a container in the virtual machine.

consumer-service: This service consumes messages from a Kafka queue that is fed by an Alteryx One service in the control plane. These messages are the trigger to run a workflow.

teleport-agent: Sets up a secure way for Alteryx SRE to connect to the cluster for troubleshooting. Alteryx One pulls the helm chart from the https://charts.releases.teleport.dev repository. Alteryx doesn't scan this third-party image.

datadog-agent: Collects logs and metrics from the cluster. Alteryx One pulls the helm chart from the https://helm.datadoghq.com repository. Alteryx doesn't scan this third-party image.
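The message-driven trigger described for consumer-service follows a common consume-and-dispatch pattern. The Python sketch below models it with the standard library `queue.Queue` as a stand-in for the Kafka queue. The message shape (`workflow_id`) and the `run_workflow` callable are hypothetical and are not the actual service interface.

```python
import queue


def consume(messages, run_workflow):
    """Drain the queue, triggering one workflow run per message."""
    results = []
    while True:
        try:
            message = messages.get_nowait()
        except queue.Empty:
            break  # no more messages waiting
        results.append(run_workflow(message["workflow_id"]))
    return results


# Stand-in for messages published by a control plane service.
pending = queue.Queue()
pending.put({"workflow_id": "wf-1"})
pending.put({"workflow_id": "wf-2"})

print(consume(pending, lambda workflow_id: f"ran {workflow_id}"))
```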

Machine Learning Package

When you deploy the Machine Learning package, Alteryx One provisions these cloud resources.

Required Services

You can find the exact service names for each cloud provider in the Cloud Resources section.

Object Storage

Kubernetes

Compute

Secret Manager

Redis

Shared File System

(Optional) Spark Processing

Node Groups and Types

Within the Kubernetes cluster, Alteryx provisions these compute resources for each cloud provider. These node types and priorities might change over time as the cloud provider evolves. For now, Alteryx strikes a balance between a few factors…

AMD machine types are less expensive than Intel machine types.

Some job types run best with memory-optimized or compute-optimized nodes. However, for some cloud providers, these node types are much more expensive, while the general purpose types are more affordable.

AWS allows Alteryx to specify a priority order of node types and provisions them as needed in priority order. Alteryx prefers memory-optimized AMD machine types, then falls back to Intel machine types, then general purpose machine types.

| Node Group Type | AWS | Azure | GCP |
|---|---|---|---|
| | t3a.2xlarge, t3.2xlarge | Standard_D2s_v3 | n2d-standard-2 |
| | r6a.2xlarge, r6i.2xlarge, m6a.4xlarge, m6i.4xlarge | Standard_B16as_v2 | n2d-standard-16 |

The automl node group has a minimum scale set of 1 and a maximum of 30.
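The scaling bounds work like a clamp on the requested node count. Here is a minimal Python sketch, assuming the autoscaler simply limits the desired count to the stated range. The function name is illustrative.

```python
MIN_NODES = 1   # minimum scale stated above
MAX_NODES = 30  # maximum scale stated above


def clamp_node_count(desired):
    """Limit a desired node count to the node group's allowed range."""
    return max(MIN_NODES, min(MAX_NODES, desired))


print(clamp_node_count(0), clamp_node_count(12), clamp_node_count(45))
```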

Software

Within the Kubernetes cluster, the Machine Learning package uses both on-demand jobs and long-running services.

Kubernetes On-demand Jobs

For Kubernetes on-demand jobs, Alteryx One retrieves a container image (from cache or from a central store) and deploys it within an ephemeral pod that lasts for the duration of the job.

automl-jobs: Job service for model training and execution.

Kubernetes Long-running Services

Alteryx uses Argo CD to deploy and maintain long-running services in your Kubernetes cluster. Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

Most long-running services in the cluster serve a utility function to allow Alteryx to monitor cluster health, scale the cluster up and down, and import/export secrets to and from the cloud-native key store and the Kubernetes secret store. These services are common to all packages that utilize Kubernetes and only 1 instance of these services will ever run at a time, even when you specify multiple packages that require them.

teleport-agent: Sets up a secure way for Alteryx SRE to connect to the cluster for troubleshooting. Alteryx One pulls the helm chart from the https://charts.releases.teleport.dev repository. Alteryx doesn't scan this third-party image.

datadog-agent: Collects logs and metrics from the cluster. Alteryx One pulls the helm chart from the https://helm.datadoghq.com repository. Alteryx doesn't scan this third-party image.

keda: Auto-scaling of long-running services based on custom metrics, with Kafka support. Alteryx doesn't scan this third-party image.

external-secrets: Import/export of secrets between AWS Secret Manager or Key Vault and the Kubernetes Secrets Store. Alteryx doesn't scan this third-party image.

cluster-autoscaler: Scale EKS, AKS, or GKE nodes based on pod demand. Alteryx doesn't scan this third-party image.

metrics-server: Allow EKS, AKS, or GKE to use the metrics API. Alteryx doesn't scan this third-party image.

kubernetes-reflector: Replication of the dockerConfigJson secret across all namespaces. Alteryx doesn't scan this third-party image.

Auto Insights Package

When you deploy the Auto Insights package, Alteryx One provisions these cloud resources.

Required Services

You can find the exact service names for each cloud provider in the Cloud Resources section.

Object Storage

Kubernetes

Compute

Secret Manager

Redis

Shared File System

Node Groups and Types

Within the Kubernetes cluster, Alteryx provisions these compute resources for each cloud provider. These node types and priorities might change over time as the cloud provider evolves. For now, Alteryx strikes a balance between a few factors…

AMD machine types are less expensive than Intel machine types.

Some job types run best with memory-optimized or compute-optimized nodes. However, for some cloud providers, these node types are much more expensive, while the general purpose types are more affordable.

AWS allows Alteryx to specify a priority order of node types and provisions them as needed in priority order. Alteryx prefers memory-optimized AMD machine types, then falls back to Intel machine types, then general purpose machine types.

| Node Group Type | AWS | Azure | GCP |
|---|---|---|---|
| | t3a.2xlarge, t3.2xlarge | Standard_D2s_v3 | n2d-standard-2 |
| | r6a.2xlarge, r6i.2xlarge, m6a.4xlarge, m6i.4xlarge | Standard_B16as_v2 | n2d-standard-16 |

The common-job node group has a minimum scale set of 1 and a maximum of 30.

Software

Within the Kubernetes cluster, the Auto Insights package uses both on-demand jobs and long-running services.

Kubernetes On-demand Jobs

For Kubernetes on-demand jobs, Alteryx One retrieves a container image (from cache or from a central store) and deploys it within an ephemeral pod that lasts for the duration of the job.

Auto Insights Jobs are orchestrated by Airflow.

data-uploader: Job service for ingestion of datasets from VFS into ClickHouse.

Kubernetes Long-running Services

Alteryx uses Argo CD to deploy and maintain long-running services in your Kubernetes cluster. Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

Most long-running services in the cluster serve a utility function to allow Alteryx to monitor cluster health, scale the cluster up and down, and import/export secrets to and from the cloud-native key store and the Kubernetes secret store. These services are common to all packages that utilize Kubernetes and only 1 instance of these services will ever run at a time, even when you specify multiple packages that require them.

teleport-agent: Sets up a secure way for Alteryx SRE to connect to the cluster for troubleshooting. Alteryx One pulls the helm chart from the https://charts.releases.teleport.dev repository. Alteryx doesn't scan this third-party image.

datadog-agent: Collects logs and metrics from the cluster. Alteryx One pulls the helm chart from the https://helm.datadoghq.com repository. Alteryx doesn't scan this third-party image.

keda: Auto-scaling of long-running services based on custom metrics, with Kafka support. Alteryx doesn't scan this third-party image.

external-secrets: Import/export of secrets between AWS Secret Manager or Key Vault and the Kubernetes Secrets Store. Alteryx doesn't scan this third-party image.

cluster-autoscaler: Scale EKS, AKS, or GKE nodes based on pod demand. Alteryx doesn't scan this third-party image.

metrics-server: Allow EKS, AKS, or GKE to use the metrics API. Alteryx doesn't scan this third-party image.

kubernetes-reflector: Replication of the dockerConfigJson secret across all namespaces. Alteryx doesn't scan this third-party image.

The Auto Insights package also deploys long-running services that service specific needs.

clickhouse: An open-source column-oriented database management system for the storage of Auto Insights Datasets. The Helm chart is developed by Alteryx and uses a third-party image from https://hub.docker.com/r/clickhouse/clickhouse-server. Alteryx doesn't scan this third-party image.

altinity-clickhouse-operator: An open-source Kubernetes Operator for the purpose of managing the deployment of ClickHouse. The Helm chart is developed by Alteryx and is based on Kubernetes resources from https://github.com/Altinity/clickhouse-operator/blob/master/deploy/operator/clickhouse-operator-install-bundle.yaml. It uses a third-party image from https://hub.docker.com/r/altinity/clickhouse-operator. Alteryx doesn't scan this third-party image.

airflow: An open-source workflow management service for the purpose of orchestrating on-demand Jobs for Auto Insights, such as the ingestion of datasets into ClickHouse. Alteryx One pulls the helm chart from https://github.com/apache/airflow/tree/main/chart. In order to install additional packages, Alteryx manages and scans a fork of the third-party image from https://hub.docker.com/r/apache/airflow.

query-engine: An application for ingesting Auto Insights Datasets into the Private Data Plane and executing queries on those Datasets from the Control Plane.

Business Continuity

Private data processing environments are available in regions that have at least 3 availability zones. This allows the private data processing environment to run in 2 availability zones and fail over to the third.
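The 2-active, 1-standby arrangement can be sketched in Python as follows. The zone names and the selection rule (prefer zones earlier in the list) are assumptions made for illustration; the actual failover is handled by the platform and the cloud provider.

```python
def active_zones(zones, failed=frozenset()):
    """Pick the 2 zones to run in, skipping any zone that has failed."""
    healthy = [zone for zone in zones if zone not in failed]
    return healthy[:2]


zones = ["zone-a", "zone-b", "zone-c"]  # hypothetical zone names

print(active_zones(zones))                     # normal operation
print(active_zones(zones, failed={"zone-a"}))  # third zone takes over
```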

Backups for the private object storage are your responsibility.

Depending on the job type, data processing jobs run either in an ephemeral pod in a Kubernetes cluster or in a container in a virtual machine. If an outage affects an actively running job, it is likely the job will fail and you will need to rerun it.

Supported Regions

In order to run a private data processing environment in a particular region, Alteryx One has these requirements…

The region must have 3 or more availability zones.

The region must provide the necessary cloud resources as described in the Cloud Resources section.

The region must provide the necessary node types as described in the Cloud Apps section.
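The three requirements above can be expressed as a single eligibility check. In this Python sketch, the `Region` fields, the function name, and the example values are hypothetical. The sketch shows only how the conditions combine, not how Alteryx One evaluates regions.

```python
from dataclasses import dataclass, field


@dataclass
class Region:
    """Hypothetical description of what a cloud region offers."""
    name: str
    availability_zones: int
    services: set = field(default_factory=set)
    node_types: set = field(default_factory=set)


def is_supported(region, required_services, required_node_types):
    """Return True only if the region meets all three requirements."""
    return (
        region.availability_zones >= 3
        and required_services <= region.services
        and required_node_types <= region.node_types
    )


example = Region("example-region", 3, {"S3", "EKS", "EFS"}, {"t3a.2xlarge"})
print(is_supported(example, {"S3", "EKS"}, {"t3a.2xlarge"}))
```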

Here are the available regions for each cloud provider:

| Cloud Global Region | Region | AWS | Azure | GCP |
|---|---|---|---|---|
| Africa | Johannesburg, South Africa | | southafricanorth | |
| Asia Pacific | Delhi, India | | | asia-south2 |
| | Hong Kong | ap-east-1 | eastasia | asia-east2 |
| | Indonesia | | | asia-southeast2 |
| | Mumbai, India | ap-south-1 | | asia-south1 |
| | Pune, India | | centralindia | |
| | Osaka, Japan | | | asia-northeast2 |
| | Seoul, South Korea | ap-northeast-2 | koreacentral | asia-northeast3 |
| | Singapore | ap-southeast-1 | southeastasia | asia-southeast1 |
| | Sydney, Australia | ap-southeast-2 | australiaeast | australia-southeast1 |
| | Taiwan | | | asia-east1 |
| | Tokyo, Japan | ap-northeast-1 | japaneast | asia-northeast1 |
| Europe | Belgium | | | europe-west1 |
| | Berlin, Germany | | | europe-west10 |
| | Finland | | | europe-north1 |
| | Frankfurt, Germany | eu-central-1 | germanywestcentral | europe-west3 |
| | Gävle, Sweden | | swedencentral | |
| | Ireland | eu-west-1 | northeurope | |
| | London, United Kingdom | eu-west-2 | uksouth | europe-west2 |
| | Madrid, Spain | | | europe-southwest1 |
| | Milan, Italy | | | europe-west8 |
| | Netherlands | | westeurope | europe-west4 |
| | Oslo, Norway | | norwayeast | |
| | Paris, France | eu-west-3 | francecentral | europe-west9 |
| | Stockholm, Sweden | eu-north-1 | | |
| | Turin, Italy | | | europe-west12 |
| | Warsaw, Poland | | polandcentral | europe-central2 |
| | Zurich, Switzerland | | switzerlandnorth | |
| Middle East | Qatar | | qatarcentral | |
| | United Arab Emirates | | uaenorth | |
| North America | Arizona | | westus3 | |
| | California | | | us-west2 |
| | Iowa | | centralus | us-central1 |
| | Montreal, Canada | ca-central-1 | | northamerica-northeast1 |
| | Toronto, Canada | | canadacentral | |
| | Nevada | | | us-west4 |
| | North Virginia | us-east-1 | | |
| | Ohio | us-east-2 | | us-east5 |
| | Oregon | us-west-2 | | us-west1 |
| | South Carolina | | | us-east1 |
| | Texas | | southcentralus | |
| | Utah | | | us-west3 |
| | Virginia | | eastus | us-east4 |
| | | | eastus2 | |
| | Washington | | westus2 | |
| South America | São Paulo, Brazil | sa-east-1 | brazilsouth | southamerica-east1 |